What Is Google BERT?

Just as accounting firms work better when departments don’t operate in silos, search queries are better understood when algorithms don’t isolate single words. The rise of longtail keywords and voice search has made people hyper-aware of challenges like relevance and natural-language searches, and of their impact on the quality of search results. Relevance is subjective, and search engines have long struggled to interpret the idiosyncrasies of natural language. Up until now, that is.

Google’s BERT (Bidirectional Encoder Representations from Transformers) algorithm, which was rolled out in October 2019, takes into consideration a search query’s:

- Entire search phrase, not just isolated words

- Contextual meaning

- Nuances of the entire search term

- Relevance of the entire search term

This is one of the biggest updates to Google’s search algorithms, and BERT’s impact on search queries is significant. At launch, Google said BERT would help it better understand about one in ten English-language searches in the U.S. That may not sound like much, but Google handles roughly five billion searches every day, so even a fraction of that volume adds up to a very large number of queries that are now better understood and matched to more appropriate results.

Why Is BERT a Big Deal?

Every day, around 15 percent of searches on Google are queries Google has never seen before, and many queries are fully formed questions and longtail keywords. That makes working out what users really want tremendously difficult. We type a full question into Google, expect it to understand us as if we were asking another person, and expect exact results within a fraction of a second. Earlier technology and algorithm updates couldn’t keep pace with how quickly our use of Google was changing.

What BERT does, according to Google, is “[improve] language understanding, particularly for more natural language/conversational queries.” BERT zeroes in on search terms that are complex and depend on context to be understood. It analyzes the nuances in phrasing and takes into account small words, such as the prepositions ‘for’ and ‘to’, that can change what a query means.

Previous algorithm updates, like Hummingbird in 2013 and RankBrain in 2015 (the first deep-learning system Google deployed in Search), were designed to respond better to new search queries and improve search results. Both were limited in their ability to analyze the entire query or to recognize how one word could change its whole meaning, as in an example from Google’s own evaluations: a search about parking on a hill with no curb.

In Google’s before-and-after comparison, the pre-BERT results (on the left) are the opposite of what the user intended. With BERT, the results take into account the word ‘no’, which changes the entire meaning and purpose of the search, and the first snippet displayed is more precise and more relevant to what the user wanted to know.

What’s really unique about BERT is its bi-directional way of learning language. During training, BERT randomly masks words in a passage of text and then uses the words before and after each masked word, in both directions, to predict it. Learning from context on both sides is what lets BERT grasp the meaning of an entire query instead of reading it strictly left to right. Moz’s Whiteboard Friday series has a helpful example and a more in-depth look at BERT overall.
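To make the masking idea concrete, here is a deliberately tiny Python sketch. It is not BERT: real BERT is a Transformer neural network trained on huge amounts of text, and it masks roughly 15 percent of the words in each training passage rather than one. In this toy, every function name and the three-sentence corpus are hypothetical. It hides one word at random, then guesses a masked word by counting which candidate words appeared in the same sentences as the surrounding context, using either only the words to the left of the mask or the words on both sides.

```python
import random
from collections import Counter, defaultdict

MASK = "[MASK]"


def mask_random_word(tokens, rng):
    """Hide one randomly chosen word (the core move of masked-language-model
    training). Returns the masked copy, the masked position, and the hidden word."""
    i = rng.randrange(len(tokens))
    masked = list(tokens)
    masked[i] = MASK
    return masked, i, tokens[i]


def build_cooccurrence(corpus):
    """For every word, count how often each other word shares a sentence with it."""
    co = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        for i, word in enumerate(words):
            for j, other in enumerate(words):
                if i != j:
                    co[word][other] += 1
    return co


def predict(co, tokens, i, candidates, bidirectional=True):
    """Score each candidate for position i by its co-occurrence with the context.
    Left-only context mimics reading the query in one direction; bidirectional
    context also uses the words after the mask. Returns all top-scoring candidates."""
    context = tokens[:i] + (tokens[i + 1:] if bidirectional else [])
    scores = {c: sum(co[c][w] for w in context) for c in candidates}
    best = max(scores.values())
    return [c for c in candidates if scores[c] == best]


# Usage: a hypothetical three-sentence "corpus", invented purely for illustration.
corpus = [
    "at the bank i deposited cash",
    "at the river i caught fish",
    "at the store i bought milk",
]
co = build_cooccurrence(corpus)
candidates = ["bank", "river", "store"]
query = f"at the {MASK} i caught fish".split()
i = query.index(MASK)

print(predict(co, query, i, candidates, bidirectional=False))  # ['bank', 'river', 'store']
print(predict(co, query, i, candidates))                       # ['river']

# Random masking of a sample query; which word gets hidden depends on the seed.
masked, pos, hidden = mask_random_word("parking on a hill with no curb".split(), random.Random(0))
print(masked, "->", hidden)
```

The left-only guess is a three-way tie because “at the” fits every sentence; only the words after the mask (“i caught fish”) single out “river”. BERT relies on the same principle of reading context on both sides, but with a learned model instead of raw counts.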

How Does BERT Affect SEO?

First, it is not possible to optimize content for BERT. Great content is great content; more on that in a bit. What BERT does is make users’ complex queries easier to understand and then match those queries with more relevant, useful results.

Because BERT is used to improve search results, it affects both search rankings and how Featured Snippets are displayed.

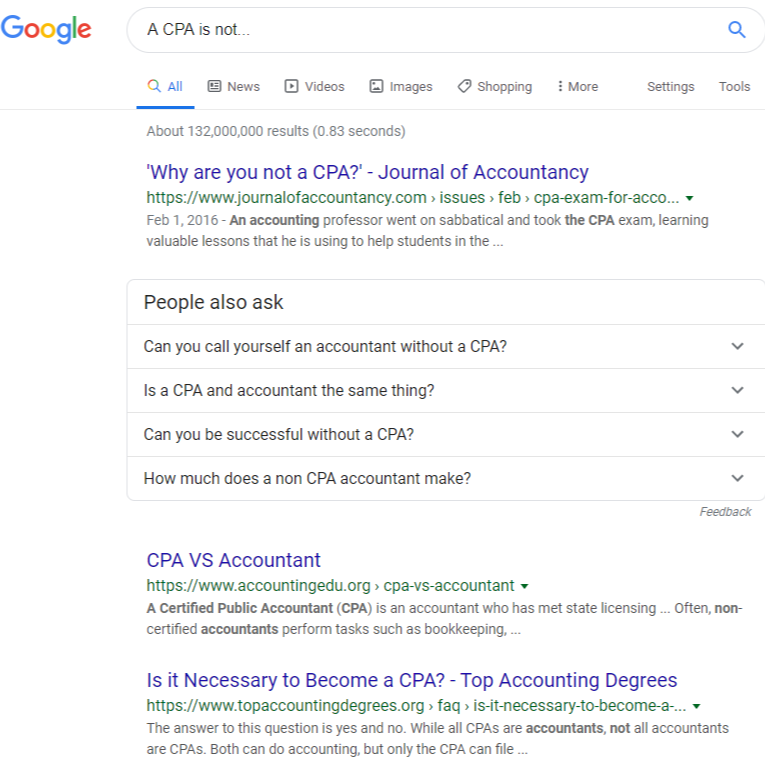

Google called this one of the biggest updates to Search in five years, but it doesn’t apply to all keywords or search terms. It isn’t aimed at single keywords or very short phrases with clear meanings, and it still struggles with negation, that is, with understanding what something is not. For example, a user who types something like “A CPA is not …” would ideally learn that a CPA is not an attorney or a financial advisor, and so on, but Google’s results for this kind of query are less clear.

Keyword analysis by Neil Patel’s team indicates that BERT mostly affects top-of-funnel keywords and phrases. In terms of user intent, these keywords and phrases tend to be informational: the user wants to learn more about something.

Some websites may have seen traffic fluctuations since BERT rolled out. If this happened and BERT is the suspected cause, remember that the quality of web traffic matters more than the quantity. When a firm’s web content appears on page one of Google’s search engine results page (SERP), it should now more often be relevant to the search query.

This should lead to better overall conversions and lower bounce rates, provided the content is high-quality and focused on the reader. Smaller and newer sites may see bigger fluctuations than large or well-established ones.

Writing Great Content for Better SEO

Quality content matters, and content doesn’t have to be lengthy to be valuable. Although BERT is designed to handle complicated, longtail queries, websites still need a mix of content types to appeal to different types of users. Perhaps the biggest takeaway from BERT is that content needs to be written with a purpose.

This means that each piece of online content used for lead generation and SEO needs to answer the question “What’s the point?” Marketers should aim for clear writing and precise words, and for an organized, predictable, and enjoyable user experience. On pages with more graphics than text, use internal links to reinforce the page’s relative importance and meaning.

Don’t optimize content specifically for BERT; it’s designed to interpret search queries, not webpages. Google rewards good content, and BERT is just another way of doing that. Some resources claim that websites should be edited to add more longtail keywords because BERT focuses on interpreting complex requests. Instead, remember that there are different types of content and different types of search intent, and the best approach is a mix of SEO strategies across the entire website.

As BERT’s algorithm is refined and applied to more search queries, keyword density will matter less. Stuffing keywords into content just for the sake of adding keywords is already bad practice; with BERT, as long as the content is written clearly and has a purpose, Google will be able to understand it and match it to relevant queries more easily.
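For readers who want to check a draft for accidental keyword stuffing, here is a minimal Python sketch of keyword density. It assumes one common definition, the percentage of a page’s words taken up by occurrences of a keyword or phrase; SEO tools differ in exactly how they count, and the function name `keyword_density` is hypothetical. No particular target percentage is implied.

```python
import re

# Assumption: a "word" is a run of lowercase letters, digits, or ASCII apostrophes.
WORD = re.compile(r"[a-z0-9']+")


def keyword_density(text, phrase):
    """Percentage of the words in text that belong to occurrences of phrase.
    Matching is case-insensitive and counts whole-word sequences only."""
    words = WORD.findall(text.lower())
    target = WORD.findall(phrase.lower())
    if not words or not target:
        return 0.0
    n = len(target)
    # Slide a window of n words across the text and count exact matches.
    hits = sum(1 for k in range(len(words) - n + 1) if words[k:k + n] == target)
    return 100.0 * hits * n / len(words)


page = "Our CPA firm offers tax help and tax planning services"
print(keyword_density(page, "tax"))       # 20.0
print(keyword_density(page, "tax help"))  # 20.0
print(keyword_density(page, "CPA"))       # 10.0
```

Since keyword density matters less as BERT is refined, treat a high number here as a prompt to reread the page for stuffing rather than as a target to hit.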

To write content for SEO and the range of search algorithms Google uses, we recommend creating specific content according to user intent and types of searches. As covered in a previous Flashpoint blog post, there are four types of user intent: transactional, informational, navigational, and commercial. By creating content that appeals to users at different stages of the marketing funnel, and for different purposes, a firm can improve its search rankings and its relevance in search results.

Approach content development from the perspective of the user:

- Who is this content for?

- What is the content’s purpose?

- What questions should the content answer?

- How will the reader use this content (search intent)?

- Why does this content matter?

Following the E-A-T guidelines for quality content is still a good idea. E-A-T, short for Expertise, Authoritativeness, and Trustworthiness, comes from Google’s Search Quality Rater Guidelines; it is a guide, not a search algorithm, and its principles have been around longer than BERT. Certain elements of a quality page, like good design and useful content, can help a website improve its search rankings regardless of which algorithms are in play. Read more about E-A-T in our blog archives.

Remember that BERT is just one of many algorithms that work together to bring users the most relevant, helpful search results. What makes BERT unique is that it’s a language model designed to better interpret queries based on full questions and complex search terms.

What comes next? Voice assistants and smart speakers like Alexa and Google Home, which let users ask direct questions, will expand the role of voice and conversational search. With BERT’s ability to interpret nuance and contextual meaning, expect the quality and relevance of search results to keep improving. For marketers, this means that clear, purposeful content that answers readers’ questions and focuses on user experience will be more important than ever.

What questions do you have about BERT and how search marketing is evolving? Ask us in the comments.