How To Make a Website Readable for AI

It’s now more important than ever to make sure that AI can read and understand websites.

Google’s AI Overviews are lowering clicks by several percentage points, among other metrics. Seer Interactive also reported a 70% drop in CTR (click-through rate) when an AI Overview is present on a results page, along with a 50% drop in CTR for paid advertisements. Safe to say, organic clicks are harder to earn these days.

Clicks are now driven in part by users following citations from AI Overviews or AI chatbots, and AI is becoming a replacement for regular search engines. As a result, websites need to treat crawlability as a main way to retain clicks.

Due to these seismic shifts in search, keeping up with best strategies is vital to remaining competitive. In the case of AI, the best that can be done currently is to make sure that a website can be read easily and properly. Strategies that boost AI readability boost ranking for search engines as well.

How Does AI Find Websites?

For ChatGPT, its developer OpenAI secured a partnership with Bing under which ChatGPT primarily uses Bing to run its searches. Bing is explicitly mentioned in OpenAI documentation for Edu and Enterprise, special versions of ChatGPT offered to universities and businesses respectively.

There’s also a web crawler used for ChatGPT’s search functions. When a user asks ChatGPT a question that requires sources, the sources are likely pulled from the web crawler. Web crawlers are internet robots that access websites to provide information for a service, in this case ChatGPT.

Perplexity currently has its own PerplexityBot for search features, though the company is just as opaque about how exactly its search works.

Anthropic added web search more recently and originally offered it only to paying users. Anthropic uses its own search tool, and some speculate that Claude uses Brave Search to power its results.

The variety of similar AI models out there highlights how no AI readability solution will be one-size-fits-all. In reality, there are a number of different methods, each of which applies broad SEO principles and helps boost the odds of showing up for all AI models and search engines.

Site Crawlability

The common theme across all AI models is the use of web crawlers or search tools to power their search. AI web search is done by:

(a) accessing an already available index of web pages or existing search engine, or

(b) creating a new index of web pages through AI web crawlers.

In either case, a clear website structure that allows crawlers to access intended pages and ignore the rest is key. The measure of this is named crawlability. There are many ways to ensure a crawlable website that can be readily understood by AI. These measures will also boost readability for search engines, meaning better rankings on the results page. The strategy list below is a highlight of the best ones, but there are other smaller, technical edits that can be made to a website to boost its readability to AI.

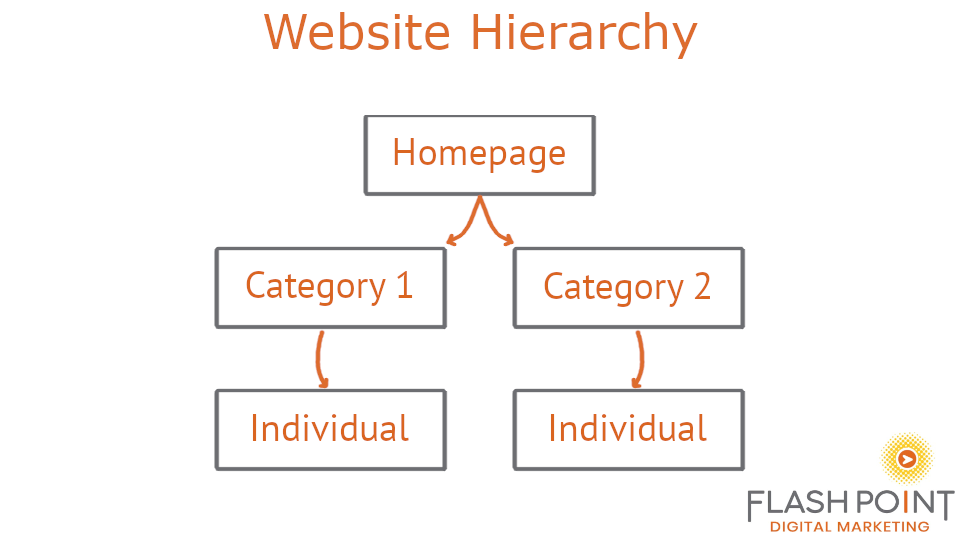

An example of how to structure a website.

One of the most important strategies for website crawlability is good website structure. In an ideal website, the homepage splits into main categories, then subcategories, then individual pages. For example, a user could access a page about cybersecurity audits through an audits category page, itself accessed through the homepage. A structure like this allows crawlers to treat different sections of the website differently and helps distinguish which parts should be crawled or indexed first.
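One way to sanity-check a hierarchy like this is to count how many clicks each page sits from the homepage. The following is a minimal sketch; the link graph, the page paths, and the function name are hypothetical and stand in for the output of a real site crawl.

```python
from collections import deque

# Hypothetical internal-link graph: each key is a page path, each value lists
# the pages it links to. The paths are illustrative, not from a real site.
SITE_LINKS = {
    "/": ["/audits/", "/tax/"],
    "/audits/": ["/audits/cybersecurity-audits/"],
    "/tax/": ["/tax/estate-tax/"],
    "/audits/cybersecurity-audits/": [],
    "/tax/estate-tax/": [],
    "/old-landing-page/": [],  # no page links here
}


def click_depths(links, start="/"):
    """Breadth-first search from the homepage; returns {page: clicks from start}."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


depths = click_depths(SITE_LINKS)
# Pages that exist but were never reached through internal links.
unreachable = sorted(set(SITE_LINKS) - set(depths))
print(depths)
print(unreachable)
```

Pages that sit many clicks deep, or that never appear in the result at all, are candidates for better internal linking.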

Alongside structure, frequent and well-placed internal links boost crawlability. “Well placed” refers to how well an internal link fits the context where it appears.

This can apply to page topics: a link about estate tax does not belong in a page about internal controls. Importantly, it also applies to the purpose of the content. Consider a link to a cash-flow forecasting template in a page about succession planning. The two are related but serve different purposes, so a resources tab would be a better fit for the template.

Adding JSON-LD and XML is another strategy that makes websites easier to crawl. Both formats are simple and make data easier to understand for AI models, their web crawlers, and the web crawlers of search engines. Google’s documentation explicitly endorses using a JSON-LD structure to inform rich results, such as the news scroll on the results page. Schema markup is one example of JSON-LD, and it is discussed in detail in the next section.

Semantic HTML is another useful tool for boosting crawlability. Using tags like <header> or <section> instead of just <div> clearly communicates website layout when the HTML is read. For both Google and AI, main content is the point of interest on a page. Since web crawlers read raw HTML and can’t physically see the page, semantic tags help guide them towards the main content.

XML sitemaps are a key example of XML used for crawlability. An XML sitemap gives crawlers additional metadata about pages and organizes the pages comprehensively in relation to each other.
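To illustrate why semantic tags help, here is a minimal sketch of how a program reading raw HTML could isolate the main content. It assumes the page wraps its content in a <main> element. The sample page and class name are hypothetical, and real crawlers do not publish their extraction logic.

```python
from html.parser import HTMLParser


class MainTextExtractor(HTMLParser):
    """Collects text that appears inside <main> and ignores the rest."""

    def __init__(self):
        super().__init__()
        self.depth = 0    # how many <main> elements are currently open
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag == "main":
            self.depth += 1

    def handle_endtag(self, tag):
        if tag == "main" and self.depth > 0:
            self.depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if self.depth > 0 and text:
            self.chunks.append(text)


# Hypothetical page: navigation and footer sit outside <main>.
SAMPLE_PAGE = """
<header><nav>Home | Services | Contact</nav></header>
<main>
  <article>
    <h1>Cybersecurity Audits</h1>
    <p>An audit reviews controls and access policies.</p>
  </article>
</main>
<footer>Copyright Example Business</footer>
"""


def extract_main_text(html):
    parser = MainTextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


print(extract_main_text(SAMPLE_PAGE))
```

With only <div> tags, the same program would have no reliable way to tell the article apart from the navigation and footer.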

In practice, XML sitemaps mostly supply metadata such as last-modified date and priority, especially if a website is already well-linked internally. While Google itself ignores the <priority> and <changefreq> properties in XML sitemaps, an AI web crawler could find them useful for deciding how to look for relevant content on a website.

Google in particular recommends adding a sitemap for new websites, large websites, and ones with a lot of media content. XML sitemaps are almost always generated through SEO plugins in WordPress, or otherwise generated through free tools offered by SEO services.
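For websites without a plugin, a sitemap can also be generated with a short script. The sketch below uses Python’s standard library. The page URLs and dates are hypothetical placeholders, and only the <loc> and <lastmod> fields are written.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

# Hypothetical pages and last-modified dates, used only for illustration.
PAGES = [
    ("https://www.example.com/", "2025-01-15"),
    ("https://www.example.com/audits/", "2025-02-03"),
]


def build_sitemap(pages):
    """Returns sitemap XML as a string with one <url> entry per page."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")


sitemap_xml = build_sitemap(PAGES)
print(sitemap_xml)
```

The output would typically be saved as sitemap.xml at the root of the website.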

Another key file is a website’s robots.txt. It is critical for AI access, because AI crawlers can be turned away if it is not set properly. In WordPress, it is edited through plugins that expose the robots.txt file. The format of robots.txt is very simple, and in its base form the file will just show:

User-agent: *

But a specific block on something may appear, such as:

User-agent: GPTBot
Disallow: /

In this case, GPTBot, one of OpenAI’s crawlers, is blocked from every page on the website. Removing the slash leaves the Disallow value empty, which allows everything for that crawler and works as an explicit exception when a broader rule would otherwise block it. If the robots.txt file looks like the first example, it is normally fine for AI, and any web crawler will be able to access the website.

It’s also possible to block the web crawler that collects website content for training models while allowing the one that retrieves pages to provide sources for user inquiries. The specific user-agent names are listed in the crawler documentation published by OpenAI, Perplexity, and Anthropic.

It’s important to note that Cloudflare has its own setting to block AI web crawlers, which is enabled by default. To disable this setting, go to Cloudflare dashboard > AI Crawl Control > Crawlers. Further information can be found in Cloudflare’s documentation.
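A robots.txt file can be checked before it goes live. The sketch below uses Python’s built-in robots.txt parser to confirm that one crawler is blocked while another is allowed. The user-agent names GPTBot and OAI-SearchBot are assumptions based on OpenAI’s published crawler list at the time of writing and should be confirmed against the current documentation.

```python
from urllib.robotparser import RobotFileParser

# Example rules: block one OpenAI crawler, allow another, allow everyone else.
# The user-agent names are assumptions; check the vendor's current crawler docs.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: OAI-SearchBot
Disallow:

User-agent: *
Disallow:
"""


def can_fetch(robots_txt, user_agent, url):
    """Returns True if the given rules let user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


for agent in ("GPTBot", "OAI-SearchBot", "SomeOtherBot"):
    print(agent, can_fetch(ROBOTS_TXT, agent, "https://www.example.com/blog/"))
```

This only shows how a compliant parser reads the rules. A crawler that ignores robots.txt is not stopped by it.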

Lastly, the previously mentioned XML sitemap can be referenced from robots.txt with a Sitemap line. This points web crawlers directly to the sitemap, as most of them read robots.txt.

Sitemap: https://www.example.com/sitemap.xml

Ensuring proper placement of rel attributes is another way to tell web crawlers where to go and where not to go.

The values nofollow, sponsored, and ugc can be set in the rel attribute of links in HTML. Nofollow was historically used to exclude external links from counting towards Google’s PageRank. Nowadays it serves as a way to avoid implying “any type of endorsement” with a link. The sponsored value is for links that were bought, and ugc is for links in user-generated page content like comments.

These values were introduced by Google but have since become universal link signals followed by most web crawlers. All three are set on links in HTML, either by editing the HTML directly or, in the case of WordPress, through the Gutenberg editor or plugins.
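To review how links on a page are labeled, the rel values can be listed with a short script. This is a minimal sketch using Python’s standard HTML parser. The sample markup and domain names are hypothetical.

```python
from html.parser import HTMLParser


class LinkRelCollector(HTMLParser):
    """Records each link's href together with its rel values."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr_map = dict(attrs)
        href = attr_map.get("href")
        if href:
            # rel can hold several space-separated values.
            rel_values = (attr_map.get("rel") or "").lower().split()
            self.links.append((href, rel_values))


# Hypothetical markup with one plain, one paid, and one user-submitted link.
SAMPLE_HTML = """
<p>Read our <a href="/audits/">audit guide</a>.</p>
<p>Partner offer: <a href="https://partner.example/" rel="sponsored nofollow">deal</a></p>
<p>Comment link: <a href="https://forum.example/post" rel="ugc">source</a></p>
"""


def collect_links(html):
    collector = LinkRelCollector()
    collector.feed(html)
    return collector.links


for href, rel in collect_links(SAMPLE_HTML):
    print(href, rel or "(no rel values)")
```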

Lastly, crawlability is heavily affected by how well a website runs. If there are links to error pages, bad load times, redirect loops, or orphan pages, then both AI and search engines might consider the website lower quality and/or less trustworthy.

A page that returns a 200 status code should never look like an error page. The code indicates a successfully loaded page and will be treated as such, including being fully indexed by Google. In the same sense, a regular content page that displays “not found” can cause problems.

If there are repeated 404 errors, it can cause issues for web crawlers by making the website appear to be mostly error pages. This happens especially when URLs are generated dynamically to produce customized pages.
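A simple check can flag pages that return a success code while showing error content. The sketch below is a rough heuristic, not how any search engine actually classifies pages. The phrase list and function name are illustrative assumptions.

```python
# Phrases that suggest error content; this list is an illustrative assumption.
ERROR_PHRASES = ("not found", "page does not exist", "no longer available")


def classify_response(status_code, body_text):
    """Labels a fetched page as 'ok', 'error', or 'soft-404'."""
    if status_code >= 400:
        return "error"
    lowered = body_text.lower()
    if status_code == 200 and any(phrase in lowered for phrase in ERROR_PHRASES):
        # The server says success, but the visible content reads like an error.
        return "soft-404"
    return "ok"


print(classify_response(200, "<h1>Cybersecurity Audits</h1>"))
print(classify_response(200, "<h1>Page Not Found</h1>"))
print(classify_response(404, "<h1>Page Not Found</h1>"))
```

Pages flagged as soft-404 are usually fixed by returning a real 404 status or by restoring the missing content.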

These strategies may seem complicated, but they are easier to manage with SEO tools/addons/plugins. They usually come with automatic generation of XML sitemaps or robots.txt. Platforms like WordPress have several well-established plugins with many features, which can help reduce the burden of managing every last detail of each page.

In addition to direct crawlability measures, the overall experience for a page is a huge factor in both search engine rankings and AI accessibility.

One of the main page experience factors is load time and responsiveness. If a website loads too slowly, web crawlers will effectively give up crawling sooner. The difference is especially noticeable for very large websites, though it can also happen on websites with poorly designed code.

Mobile-friendliness is another big one. Because AI draws from Google results, Google’s policy of mobile-first indexing logically plays a large role in AI result visibility. Most of the time a website will look good on mobile from the HTML side without additional action. However, websites can display incorrectly and content can get cut off.

In the case of WordPress, some themes are worse for mobile devices, and too many plugins can end up bloating a website and worsening responsiveness. Otherwise, WordPress works well on mobile.

Schema Markups/Structured Data

Among the actions taken to enhance crawlability, schema markups stand out as the most in-depth. They are essentially extra descriptors of website elements for search engines and other robots, which in this case means AI. In WordPress, schema markups can be added either through general SEO plugins or dedicated ‘structured data’ ones. Each plugin has its own way of doing schema markups.

Schema markups provide context for interactive or dynamic features. They also describe the relationship of elements and disambiguate when HTML fails to clarify. Schema markups allow an AI to “see” a page like a person might. There are many types of schemas that can be added.

A few tags are common to almost every schema structure. @context defines the reference for how to interpret the rest, and is usually set to “https://schema.org”. @type is the category of the item being described, and the URL of the page is included to help anchor the schema.

If a website contains a built-in search bar, AI can access it, but can only guess how the search bar works: what tags are used, what separators, how to exclude or include results.

In this case, SearchAction Schema is a helpful way to offer more information for using the search bar. This is especially useful when the integrated search bar is not important enough to have on-page documentation. SearchAction appears like the following:

<script type="application/ld+json">

{

"@context": "https://schema.org",

"@type": "WebSite",

"url": "https://www.example.com/",

"potentialAction": {

"@type": "SearchAction",

"target": "https://www.example.com/search?q={search_term_string}",

"query-input": "required name=search_term_string"

}

}

</script>

The target property defines the structure of a search URL. The query-input property then lists the terms found in target and notes whether each is optional or required. Any term can be added to query-input as long as the target URL has a matching placeholder in curly brackets.
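To show how a robot could use the target template, the following sketch fills the placeholder with a URL-encoded search term. The function name is hypothetical, and how a given AI agent actually consumes SearchAction is not publicly specified.

```python
from urllib.parse import quote_plus

# The target template from the SearchAction example.
TARGET = "https://www.example.com/search?q={search_term_string}"


def build_search_url(target, **terms):
    """Substitutes URL-encoded values into each {placeholder} of the target."""
    url = target
    for name, value in terms.items():
        url = url.replace("{" + name + "}", quote_plus(value))
    return url


search_url = build_search_url(TARGET, search_term_string="estate tax planning")
print(search_url)
```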

If a website refers to a business with a physical location, then LocalBusiness Schema is very important. It’s a subset of the broader “Organization” schema that indicates a business serving a particular area.

<script type="application/ld+json">

{

"@context": "https://schema.org",

"@type": "LocalBusiness",

"name": "Example Business",

"url": "https://www.example.com",

"telephone": "+1-206-555-1234",

"address": {

"@type": "PostalAddress",

"streetAddress": "123 Street",

"addressLocality": "Houston",

"addressRegion": "TX",

"postalCode": "77001",

"addressCountry": "US"

},

"sameAs": [

"https://www.facebook.com/examplebusinesshandle",

"https://www.instagram.com/examplebusinesshandle"

]

}

</script>

In this schema, the name and phone number are provided. Address is the important added element, though additional data like “geo” for coordinates or “openingHoursSpecification” for business hours can be provided as well. The “sameAs” section lists the business’s social media profiles, which is especially important for helping AI avoid mix-ups when businesses with the same name exist.
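JSON-LD is strict JSON, so a single missing comma or quote makes the whole block unreadable to a parser. The sketch below pulls each JSON-LD block out of a page and checks that it parses. The regular expression is a simplification that assumes the type attribute is written exactly as shown, and the sample snippets are hypothetical.

```python
import json
import re

# Simplified pattern for <script type="application/ld+json"> blocks.
SCRIPT_PATTERN = re.compile(
    r'<script[^>]*type="application/ld\+json"[^>]*>(.*?)</script>',
    re.DOTALL | re.IGNORECASE,
)


def check_json_ld(html):
    """Returns one (schema type or None, error message or None) pair per block."""
    results = []
    for raw in SCRIPT_PATTERN.findall(html):
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as exc:
            results.append((None, f"invalid JSON: {exc.msg} (line {exc.lineno})"))
            continue
        schema_type = data.get("@type") if isinstance(data, dict) else None
        results.append((schema_type, None))
    return results


GOOD = ('<script type="application/ld+json">{"@context": "https://schema.org", '
        '"@type": "LocalBusiness", "name": "Example Business"}</script>')
# Same idea, but the comma between the two properties is missing.
BAD = ('<script type="application/ld+json">{"@type": "LocalBusiness" '
       '"name": "Example Business"}</script>')

print(check_json_ld(GOOD))
print(check_json_ld(BAD))
```

A check like this only confirms the syntax. Whether the properties are valid for the chosen schema type still needs a dedicated structured data testing tool.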

Lastly, a BreadcrumbList schema for larger websites directly tells both search engines and AI what the layout is. It lists out each step of a website hierarchy as a separate item and indicates the level through “position”.

<script type="application/ld+json">

{

"@context": "https://schema.org",

"@type": "BreadcrumbList",

"itemListElement": [

{

"@type": "ListItem",

"position": 1,

"name": "Home",

"item": "https://www.example.com/"

},

{

"@type": "ListItem",

"position": 2,

"name": "SEO",

"item": "https://www.example.com/seo/"

},

{

"@type": "ListItem",

"position": 3,

"name": "Blog",

"item": "https://www.example.com/seo/blog/"

}

]

}

</script>

A distinct “name” entry is useful in case the URL doesn’t make the page’s purpose clear. Other properties used for each item include “image” for an associated image and a “description”.
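On larger websites, breadcrumb markup is usually generated rather than written by hand. The sketch below builds a BreadcrumbList from a page URL, assuming the URL path mirrors the website hierarchy. The function name is hypothetical, and the display names are supplied by the caller.

```python
import json
from urllib.parse import urlsplit


def breadcrumb_schema(url, names):
    """Builds BreadcrumbList JSON-LD for a URL; names labels each level."""
    parts = urlsplit(url)
    base = f"{parts.scheme}://{parts.netloc}/"
    segments = [segment for segment in parts.path.split("/") if segment]
    # Start at the homepage and add one path segment per level.
    items = [base]
    for segment in segments:
        items.append(items[-1] + segment + "/")
    if len(names) != len(items):
        raise ValueError("need one name per breadcrumb level")
    return {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": position, "name": name, "item": item}
            for position, (name, item) in enumerate(zip(names, items), start=1)
        ],
    }


schema = breadcrumb_schema("https://www.example.com/seo/blog/", ["Home", "SEO", "Blog"])
print(json.dumps(schema, indent=2))
```

The printed JSON would then be placed inside a script tag of type application/ld+json on the page.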

Best Practices for Content

In addition to crawlability, the way content is written and where that content is placed is pivotal in helping AI both find and digest the information it seeks.

AIs break things down semantically, meaning websites should prioritize good keywords and clear content structure. AI also tends to base its results off of a question’s wording and place more emphasis on lower-ranking results if they are a better match. In summary, the page that gets picked is the one with the most relevance and closest-matching words.

The writing side of SEO has shifted more than the technical side with the advent of AI. That being said, many of the principles still apply. One such principle is comprehensible, direct content.

The gist is to write fewer words. Fewer words means content fits the writing style AI delivers to users. In addition, an 8th-grade reading level is the “sweet spot.” Fewer filler words and more summary of key information is ideal.

Beyond writing complexity, the order of information matters too. Answer questions and lead with answers. A user asks an AI model a question or asks it to summarize a page. Simply giving AI the answers is a great strategy to get picked up. Prioritize answering what prospects ask about and put those answers at the top of the content.

Fundamentally, AI breaks down information by token. A token is a small unit of information the size of a short word used for text processing. When the AI grabs the fetched information, it usually comes in the form of cleaned up text derived from raw HTML. Then AI processes tokens and their relationships.

How to optimize for the token process? Put simply, break up text with small paragraphs/bullet points. This helps an AI understand content better. A key points summary at the top or bottom of content has a similar effect. Using this strategy makes the content look more like the answer AI would give, so it seems a more relevant source.

In terms of best keywords, AI differs from Google in that it prefers plain language. In other words, AI favors language that matches its own output over the perceived credibility that comes from using industry terms. “Short head” and “long tail” are SEO industry terms that refer to how specific a keyword is. A graph of keyword usage will show that plain words are harder to rank for but far more searched.

Reddit is by far the most used AI source. Reddit posts often have the answer to extremely specific or technical questions that top-ranking Google results don’t. As this indicates, a fantastic strategy for AI-optimized SEO writing is to use simple language to explain niche concepts.

Last is provenance. Provenance is just adding the date, author, and other relevant information to content. In WordPress, articles have this by default, but other content might not. Provenance is good for crawlability and appearing on Google’s results page snippets.

This is especially important for topics where being recent matters a lot, because content is not assumed to be new when its publish date is ambiguous. As an addition to date published, last updated is especially useful for signaling fresh content.
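One common way to expose provenance to crawlers is Article structured data, although it is not the only option. The sketch below builds such a block with publish and update dates. The author name and dates are hypothetical placeholders.

```python
import json
from datetime import date


def article_schema(headline, author_name, published, modified=None):
    """Builds Article JSON-LD with author and date fields."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": published.isoformat(),
    }
    if modified is not None:
        # Signals that the content was refreshed after publication.
        data["dateModified"] = modified.isoformat()
    return data


# Hypothetical author and dates, for illustration only.
article = article_schema(
    "How To Make a Website Readable for AI",
    "Jane Doe",
    published=date(2025, 1, 15),
    modified=date(2025, 3, 1),
)
print(json.dumps(article, indent=2))
```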

Machine-Friendly Media

AI models work by processing information through tokens. AI can’t really watch a video or look at an image. Therefore, providing text substitutes for media is a vital strategy in helping AI understand content and provide answers to its users.

Few websites have done this properly so far. In fact, nearly a fifth of the top one million homepages on the internet have missing alt text.

Despite this, AI relies heavily on alt text and video captioning to gather information. So a key strategy besides content and website crawlability is media accessibility. In particular, images and videos are the most common form of media on the internet.

If a website contains these media and they include things such as infographics, AI won’t be able to source them for a user request without further text data. Video titles and descriptions are readable, but the video itself is not.

Alt text is the best way to provide information about images to AI. Alt text has historically been important for Google, as it is highly valued by the search engine. AI places additional value on labeled images. Alt text can be edited in WordPress by accessing the Media Library or by editing an image’s properties directly. Otherwise, alt text appears as an attribute on the image element in HTML.

A green frog sitting in a pink rose. Source: unsplash.com

<img decoding="async" class="responsiveImg-czQTaZ" src="https://images.unsplash.com/photo-1518737496070-5bab26f59b3f?fm=jpg&q=60&w=3000&ixlib=rb-4.1.0&ixid=M3wxMjA3fDB8MHxwaG90by1wYWdlfHx8fGVufDB8fHx8fA%3D%3D" alt="Photo of a green frog sitting in a pink rose." width="449" height="417">

As an example of alt text, the above HTML line corresponds with the above photo. Images used purely for decoration should have an empty alt attribute rather than a description, and descriptions should be kept short and simple. Google has also posted a helpful guide on the topic.
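Missing alt text is easy to find with a short audit script. The sketch below lists images that have no alt attribute at all and, separately, images with an empty one, which is the usual way to mark a decorative image. The sample markup and file paths are hypothetical.

```python
from html.parser import HTMLParser


class AltTextAuditor(HTMLParser):
    """Sorts <img> elements by whether they carry alt text."""

    def __init__(self):
        super().__init__()
        self.missing = []     # no alt attribute at all
        self.decorative = []  # alt attribute present but empty

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr_map = dict(attrs)
        src = attr_map.get("src", "(no src)")
        if "alt" not in attr_map:
            self.missing.append(src)
        elif not (attr_map["alt"] or "").strip():
            self.decorative.append(src)


# Hypothetical markup: one described image, one decorative, one unlabeled.
SAMPLE_HTML = """
<img src="/img/frog.jpg" alt="Photo of a green frog sitting in a pink rose.">
<img src="/img/divider.png" alt="">
<img src="/img/chart.png">
"""


def audit_alt_text(html):
    auditor = AltTextAuditor()
    auditor.feed(html)
    return auditor.missing, auditor.decorative


missing, decorative = audit_alt_text(SAMPLE_HTML)
print("Missing alt:", missing)
print("Empty alt:", decorative)
```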

Alt text’s main function is screen reading accessibility. Therefore, appropriate titles/captions for images are also important, even if they are weaker SEO signals. Titles appear when hovering the mouse over an image. Captions are added text that describe an image. Together with alt text, they provide the most information about content.

Titles are an available property for every image. In WordPress, they can be accessed under advanced properties while editing posts or found below alternative text in the media library image viewer. Captions are accessible in the same WordPress menus. Captions must be added manually in websites that don’t use WordPress, as they are not a built-in HTML property of images.

In the case of videos, video transcripts are an additional step beyond title and description that often gets overlooked. Transcripts remain important for site readability while AI technology to read audio is being developed. Both search engines and AI models use transcripts to determine the content inside a video. They can be especially useful for tutorial or guide videos, because the content in those videos ranks very well for “how to” questions. Tutorial videos also tend to show up a lot in AI Overviews.

Hover over the language entry in the Subtitles column and click add to add subtitles to a YouTube video. Source: studio.youtube.com

If the website embeds YouTube content, then transcripts can be edited directly on YouTube. Go to YouTube Studio and click on the subtitles tab. Then click the add language button and add a desired subtitle language. Add a subtitle by going to the subtitles column and clicking the add button.

Subtitles are how YouTube gets an official transcript of a video. If no manual subtitles are present, YouTube sometimes auto-generates captions and uses them to create a transcript. YouTube’s automatic captions are not always indexed by Google and can have far lower SEO value.

If the videos are produced with scripts, then those scripts can also be optimized to provide better SEO value for the video’s transcript. Further, there are now various AI tools available to generate transcripts in many languages.

Then there are audio transcripts. A common example is a podcast episode page where a Soundcloud widget sits above a transcript of the podcast episode. Conference recordings or webinars are also great examples. The transcripts rank on Google or get picked up by AI and deliver the audio content to relevant users.

Lastly, PDFs are part of websites even if uncommon. PDF pages often get picked up by Google and put at the top of rankings for relevant searches. Even stray pages no longer normally accessible show up as results. AI tends to grab PDF links when the answer requires technical or academic information, or specific situational details such as financial reporting.

If PDFs aren’t meant to be accessible, then it’s a good idea to ensure so. The first way is through the following robots.txt disallow line.

Disallow: /*.pdf$

Second, X-Robots-Tag is an HTTP response header that a server can send with a page or file, including a PDF, to control indexing behavior. Google will respect the X-Robots-Tag only if the same URL is not blocked through robots.txt, because a blocked URL is never fetched and its headers are never seen.

Third, AI might still be able to access a PDF page if the web crawler ignores either. The only true way to protect a PDF page is to restrict access through a login or other means.
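The wildcard rule for PDFs can be read as follows: the asterisk matches any run of characters, and the dollar sign anchors the end of the URL path. The sketch below translates such a pattern into a regular expression to show which paths it covers. It is a simplified illustration, not a full robots.txt parser, and the function names and sample paths are hypothetical.

```python
import re


def robots_pattern_to_regex(pattern):
    """Translates a robots.txt path pattern into a compiled regex.

    '*' matches any run of characters; a trailing '$' anchors the end.
    Without '$', the pattern works as a prefix match.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile("^" + body + ("$" if anchored else ""))


def is_blocked(pattern, path):
    return robots_pattern_to_regex(pattern).match(path) is not None


for path in ("/reports/annual.pdf", "/reports/annual.pdf?download=1", "/reports/annual.html"):
    print(path, is_blocked("/*.pdf$", path))
```

Note that a PDF URL with a query string after the extension is not matched by the anchored pattern, so such links would need their own rule.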

SEO strategists strongly recommend putting content in a website page, but there are some reasons to stay in PDF. Long-form reports, guides, and manuals are all expected to be shared as document files. For these cases, there are a couple of best practices to make PDFs readable for both search engines and AI.

First is a text layer. Web crawlers cannot easily read a PDF that consists only of images, so if a PDF is scanned, an optical character recognition (OCR) program can quickly generate selectable text from the image data. Content strategies apply to a PDF’s readability as well.

Next are document metadata and properties. Usually the name of a PDF appears in the link, so the name can be optimized based on SEO keyword strategy to show up in results. Other properties like description and author get picked up as well. Adobe Acrobat has additional features for metadata such as tagging PDFs.

Lastly, an HTML version of the same document can be created and marked as the preferred version with a canonical link. Because a PDF has no HTML head, the canonical link for a PDF is sent as an HTTP Link header with rel="canonical" rather than as a tag inside the file. It points search engines from the PDF to the more digestible version. The canonical link is a hint, not a redirect, and the link an AI chooses will vary. In other words, an AI might find a PDF with a canonical link and still pick the PDF, dropping the easier-to-read HTML version. When picking just one, creating an HTML version is more valuable than optimizing the PDF itself.
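Since a PDF cannot hold HTML tags, hints such as a canonical link or a noindex directive are typically sent as HTTP response headers. The sketch below only assembles the header values. The function name and URL are hypothetical, and how the headers get attached depends on the web server or CDN in use.

```python
def pdf_response_headers(canonical_url=None, noindex=False):
    """Builds HTTP response headers for a PDF file as a plain dict."""
    headers = {"Content-Type": "application/pdf"}
    if canonical_url:
        # Points crawlers to the preferred (HTML) version of the document.
        headers["Link"] = f'<{canonical_url}>; rel="canonical"'
    if noindex:
        # Asks crawlers that honor it not to index this file.
        headers["X-Robots-Tag"] = "noindex"
    return headers


print(pdf_response_headers(canonical_url="https://www.example.com/guides/annual-report/"))
print(pdf_response_headers(noindex=True))
```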

Internal/external links, alt text, and other webpage strategies apply to PDFs. Lastly, what works for PDFs can also work for other text document formats such as DOC or DOCX.

How To Test AI Readability

With the many different strategies presented to boost a website’s visibility for AI, testing is important. There are many ways to test AI readability, ranging from simply asking an AI model to coding a proprietary AI agent to interact with the website.

First, simply asking an AI model questions relevant to the website’s purpose can be a good indicator of how well it appears both before and after the use of readability strategies.

If a website pertains to a certain service or area, then ask a question like “are there any good CPA Firms near Houston TX?” Asking about the niche topic can show both the visibility of niche content in the website and if there are competitors in the topic’s domain.

Likewise, it’s important to note what AI actually says. It should repeat details like opening hours or contact information if they are included on a website. This is especially important for schema markups which indicate details invisible but accessible for a robot reading the HTML. In addition, repeating the prompts and noting any informational differences between answers could indicate an AI guessing part of the answer.

Broader questions such as “provide a rundown on entity tax considerations for 2025” show if the website is competitive for general topics. The sources used often match Google’s first page. However, niche content can make a page get sourced despite ranking lower.

Besides interacting with AI models, the “curl” command can be used in a Windows command terminal to provide information through the lens of a crawler.

curl -sS https://example.com/robots.txt

curl -sS https://example.com/sitemap.xml | xmllint --format -

The two commands listed above can be used to readily check what a web crawler would see for both robots.txt and an XML sitemap. Errors are a sign of either an incorrect command or, more importantly, a problem with the website itself.

Besides testing accessibility, curl can also mask as an AI or a search engine by using its respective user-agent string.

curl -sI -A "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; GPTBot/1.0; +https://openai.com/gptbot)" https://example.com

curl -sI -A "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; PerplexityBot/1.0; +https://perplexity.ai/perplexitybot)" https://example.com

curl -sI -A "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; ClaudeBot/1.0; +http://www.anthropic.com/bot.html)" https://example.com/some-page

The “-sI” can be made “-s” to show the full page results to spot any differences from how it shows up to users. There are many other ways to utilize the curl command for SEO purposes besides the commands mentioned here.
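The same check can be scripted when many pages or bots need testing. The sketch below is a rough Python equivalent of the curl commands, using the same user-agent strings. The function names are hypothetical. Sending the request needs network access, and a spoofed user-agent only tests rules that key on the user-agent string.

```python
import urllib.error
import urllib.request

# User-agent strings copied from the curl examples.
USER_AGENTS = {
    "GPTBot": "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; GPTBot/1.0; +https://openai.com/gptbot)",
    "PerplexityBot": "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; PerplexityBot/1.0; +https://perplexity.ai/perplexitybot)",
    "ClaudeBot": "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; ClaudeBot/1.0; +http://www.anthropic.com/bot.html)",
}


def build_head_request(url, bot_name):
    """Creates (but does not send) a HEAD request with the bot's user-agent."""
    return urllib.request.Request(
        url, method="HEAD", headers={"User-Agent": USER_AGENTS[bot_name]}
    )


def fetch_status(url, bot_name, timeout=10):
    """Sends the request and returns the HTTP status code. Needs network access."""
    try:
        with urllib.request.urlopen(build_head_request(url, bot_name), timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code  # 4xx and 5xx responses are raised as exceptions


request = build_head_request("https://example.com", "GPTBot")
print(request.get_method(), request.get_header("User-agent"))
```

Calling fetch_status for each bot name and comparing the codes shows whether one crawler is being treated differently from the others.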

Conclusion

While the sheer number of different ways to optimize for AI readability may seem overwhelming, they boil down to two main components: having a good website layout and adding plenty of metadata. Website layout makes navigation easier, and metadata helps demonstrate to AI what a user sees.

In addition, most of the topics mentioned are already considered highly valuable from a traditional SEO perspective. AI readability is already impacted heavily by optimizing for search engine rankings.

If a website uses WordPress, then XML sitemaps, robots.txt, noindex settings, canonical tags, schema markups, and provenance are already included either officially or through SEO plugins. Content strategy and image/video/PDF accessibility are not included and should be the main point of focus.

If a website uses Cloudflare, then checking the AI crawler settings is highly recommended. As mentioned previously, this setting can be changed by going to the AI Crawl Control part of the Cloudflare dashboard.

As the use of AI for search continues to grow, so will the need to optimize for it. Luckily, foundational SEO still works well for AI. Businesses and websites that prioritize good website structure and plenty of metadata will be best positioned to stay competitive for both search engine and AI result ranking well into the coming years.

Connect with Us

Staying on the cutting edge of SEO in the AI landscape requires keeping up with changes as they happen. At Flashpoint Marketing, our team of SEO experts assists using new insights and best practices.